Assembler: Scalable 3D Part Assembly via Anchor Point Diffusion

Wang Zhao¹, Yan-Pei Cao², Jiale Xu¹, Yuejiang Dong¹,³, Ying Shan¹

¹ARC Lab, Tencent PCG  ²VAST  ³Tsinghua University

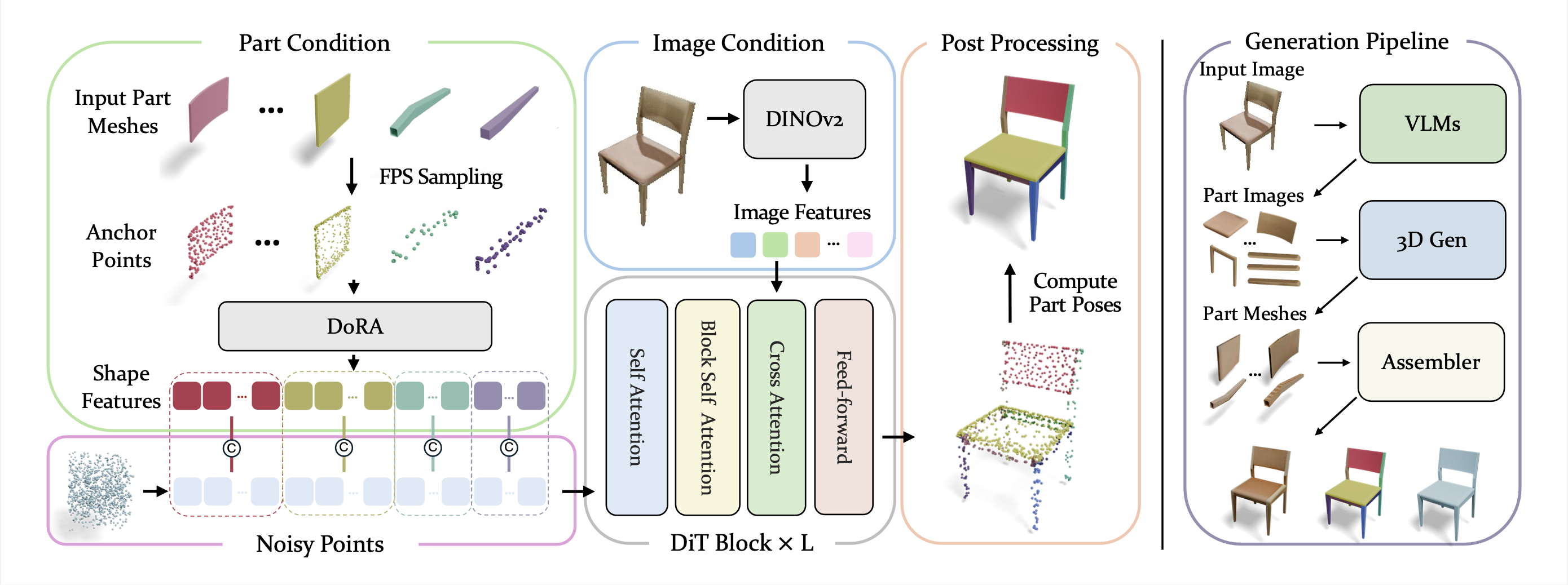

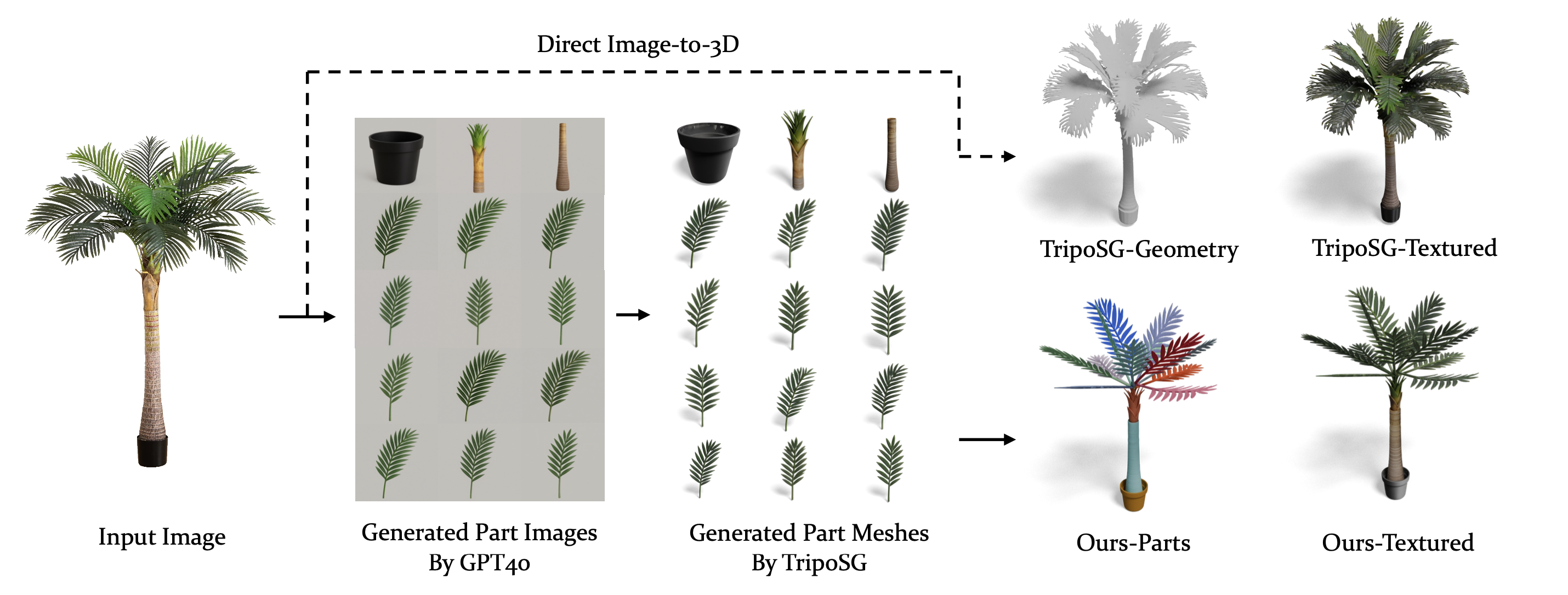

Given 3D part meshes and a condition image, Assembler can effectively assemble them into a complete 3D object. Parts are labeled in different colors.

SIGGRAPH ASIA 2025
